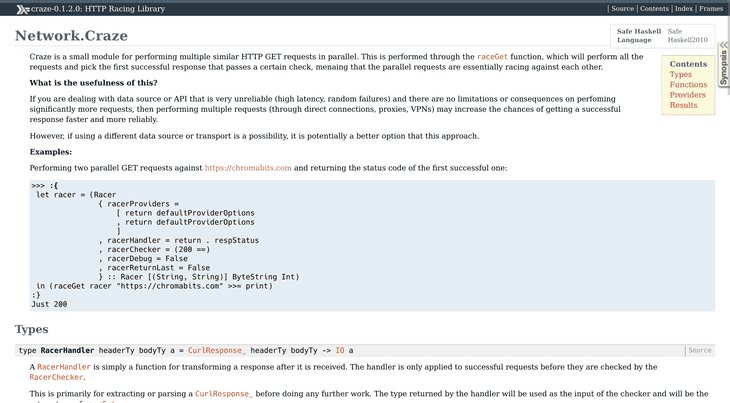

In a previous post, I covered how to set up continuous integration for Haskell projects using a combination of Stack, Travis CI, and Docker. But what about documentation?

If you already have CI set up for your project with Stack and Travis CI, it is actually pretty easy. In fact, you can use GitHub’s Pages feature, which hosts static sites based on the content of the `gh-pages` branch, to host your documentation for free.

The following is a bash script I’ve been using on a couple of projects on GitHub. It takes care of collecting the documentation and coverage reports generated while building the application, which are produced by Stack’s `--haddock` and `--coverage` flags, respectively.

```bash
#!/bin/bash
# .travis/assemble-docs.sh
#
# Make sure you install the Travis CLI and encrypt a GitHub API token with
# access to the repository: `travis encrypt GH_TOKEN=xxxxxxx --add`.
#
# This script is meant to be run during the `after_success` build step.

# Copy haddocks and coverage reports to a separate directory.
mkdir -p ../gh-pages
cp -R "$(stack path --local-doc-root)" ../gh-pages
cp -R "$(stack path --local-hpc-root)" ../gh-pages
cd ../gh-pages

# Set identity.
git config --global user.email "travis@travis-ci.org"
git config --global user.name "Travis"

# Add branch.
git init
git remote add origin "https://${GH_TOKEN}@github.com/${TRAVIS_REPO_SLUG}.git" > /dev/null
git checkout -B gh-pages

# Push generated files. Output (including errors on stderr) is discarded so
# the token in the remote URL never ends up in the build log.
git add .
git commit -m "Haddocks updated"
git push origin gh-pages -fq > /dev/null 2>&1
```

To do its job, the script relies on some neat tricks/hacks to keep the process as simple as possible:

- Stack: When using the `--haddock` and `--coverage` flags, Stack places documentation and coverage reports in specific paths. You can query these paths using `stack path`. In the script above, we pass specific flags (`--local-doc-root` and `--local-hpc-root`) so the output of the command is a single line with the requested path. This avoids having to guess where the compiler placed the documentation and related files.
- GitHub API tokens: Managing SSH keys inside a build job is doable, but probably not easy. Thankfully, GitHub allows you to push commits to a repository using just an API token.
- Travis CI encrypted variables: These allow us to conveniently store the aforementioned token securely and access it as an environment variable while the job runs. We do have to use `> /dev/null` in a couple of places so the token is not leaked in build logs.
- Bare Git branch: Given that keeping track of history is not a priority, and that history could break the build process if somebody else pushed to the documentation branch, we simply keep a single commit on the `gh-pages` branch. This is easy to do by initializing a new repository, committing, and force-pushing into the branch.
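The single-commit trick can be tried locally, without GitHub or Travis CI. The following is a minimal sketch, assuming a local bare repository stands in for the GitHub remote; the `publish_site` function and the temporary paths are hypothetical. It publishes a directory twice and checks that the `gh-pages` branch still holds exactly one commit.

```bash
#!/bin/bash
set -euo pipefail

# publish_site SITE_DIR REMOTE_URL
# Hypothetical helper: re-initializes SITE_DIR as a fresh repository and
# force-pushes it, so the remote gh-pages branch always holds one commit.
publish_site() {
  local site_dir=$1 remote_url=$2
  (
    cd "$site_dir"
    rm -rf .git                      # drop any previous history
    git init -q
    git config user.email "travis@travis-ci.org"
    git config user.name "Travis"
    git remote add origin "$remote_url"
    git checkout -q -b gh-pages      # fresh, unborn branch
    git add .
    git commit -q -m "Haddocks updated"
    git push -fq origin gh-pages > /dev/null 2>&1
  )
}

# Assumption: a local bare repository plays the role of GitHub.
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
git init -q --bare "$work/remote.git"
mkdir -p "$work/site"

# Publish twice; the second force-push replaces the first commit.
echo "v1" > "$work/site/index.html"
publish_site "$work/site" "$work/remote.git"
echo "v2" > "$work/site/index.html"
publish_site "$work/site" "$work/remote.git"

# Number of commits on the remote gh-pages branch.
git -C "$work/remote.git" rev-list --count gh-pages
```

On Travis CI, the remote URL would be the token-authenticated GitHub URL used in the script above instead of a local path.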

If you would like to see a public working example, check out this repository and its build logs on Travis CI. The resulting documentation is available as a GitHub Pages website, and coverage reports can be found under /hpc on the site.

After using Gentoo for a while, I’ve noticed that while the main portage tree has many up-to-date ebuilds, there are still some that lag behind upstream.

The good news is that, if you’re a bit adventurous, you can add overlays, which, like the name implies, are ebuild trees that are overlaid on top of your local tree.

Overlays usually contain software or package versions that are not available in the main tree. However, this also means that they might not have gone through the same amount of quality assurance as packages from the main tree.

Overlays may be personal or maintained by a small group of developers. They can also exist just locally on your machine or they can be hosted on a git repository.

There are also tools like layman, which can help you manage the overlays used on a machine.

So far, my favorite overlays are gnome, steam-overlay, and haskell, which provide a recent version of GNOME (3.20), Steam, and up-to-date Haskell packages, respectively.

However, not everything has an ebuild, even in overlays, so you might have to write your own.

I’ve been collecting a couple of these and putting them in my own personal overlay, hosted on Git. This has allowed me to version them and share them across Gentoo machines.

If you are feeling adventurous, you can try it by adding the following file to `/etc/portage/repos.conf/chromabits.conf`:

```ini
[chromabits]
location = /usr/local/overlay/chromabits
sync-type = git
sync-uri = https://phabricator.chromabits.com/diffusion/PORT/portage.git
auto-sync = yes
```

However, I cannot guarantee that they will build, install correctly, or not start a fire, given that I lack the time and resources to perform anything more advanced than “It works on my machine(s)” in terms of QA.

Here is a quick summary of a few of the goodies included:

- iio-sensor-proxy: Allows GNOME to automatically adjust the screen brightness.

- nm-applet: A recent version (1.2) of the NetworkManager applet.

- corebird: A recent version (1.2) of a great GTK+ Twitter client.

- slack: The official Slack client for Linux.

- networkmanager: The latest version of NetworkManager, which has nice features like Wi-Fi power save and MAC address randomization.

- softether-vpn: The SoftEther VPN server and client.

- syncthing-gtk: A GTK GUI for Syncthing.

At my current job, we are starting to adopt Haskell to write some of our backend APIs and, like other projects at the company, we are using Docker for deploying them to staging and production environments.

Working with Haskell required some rethinking of certain parts of our workflow, but it also led to much smaller images, with some room for further improvement.

Unlike PHP or JavaScript, Haskell is a compiled language, meaning that some of the approaches we previously used for building Docker images did not port that well.

For dynamic languages, we followed roughly these steps to build our images:

- Use a base image with packages for the software we need (Debian, Alpine).

- Install server software, such as Nginx, Node.js, and PHP.

- Install language tools and package managers.

- Copy application sources.

- Download dependencies.

- Final optimizations and cleanup.

This is a hybrid approach where we have both build and runtime dependencies in the same Docker image. This is useful because image builders such as Quay.io can automatically build the Docker image for you on every commit, without the need for an additional step in the CI pipeline. It also has the slight advantage of leaving enough tooling inside the container for light debugging.

As a result, the image is not as small as it could be, since it contains a lot of things that are not commonly used at runtime. However, for these cases the added convenience somewhat outweighs the cost of a larger image.

For Haskell projects, though, things are a bit different, and not in a bad way:

The community has made an excellent build tool called Stack. Stack takes care of almost everything related to setting up your project: installing GHC, pulling dependencies, building, testing, coverage reports, and documentation. When paired with Nix, it can even pull non-Haskell dependencies for reproducible builds.

If we try a hybrid image approach like the one above using Stack, we mainly have to do the following in a Dockerfile:

- Download and install Stack.

- Copy application sources.

- Install GHC (`stack setup`).

- Compile the project (`stack build`).

This works, but it is extremely slow, and the resulting images are huge (over 800 MB!).

On every Docker build, Stack would have to download and install GHC, and then proceed to download and compile every dependency of the project, which tended to take a good 30 minutes on a Quay.io worker node.

When developing locally, you only have to go through this process every now and then, because most of it is cached in directories such as `~/.stack` and `.stack-work`.

Looking for faster build times and smaller images, I decided to experiment with splitting the build and runtime aspects of the project.

The build part was already set up, since we were using Travis CI for running unit tests and some integration tests. Compared to basic Docker image builders, Travis CI has the clear benefit of a cache that can be reused across builds without much work. This cache allowed us to keep our built dependencies across builds, which reduced the build time to under 5 minutes.

To enable caching of Stack builds, you just need to add the work directories to the `cache` section of `.travis.yml`:

```yaml
cache:
  directories:
    - '$HOME/.stack'
    - .stack-work
```

So, getting the runtime half working meant taking the resulting build from the previous steps and building a Docker container with enough dependencies and data files to run the application.

FPComplete has a great article out there on how to create minimal Docker images for Haskell projects.

The main difficulty with this process is that Haskell programs are not built statically by default, meaning that you have to identify all the libraries the binary is linked against and include them in the final runtime image.
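One way to find those libraries is `ldd`, which prints each shared library a binary is linked against, along with the path it resolves to. The following is a minimal sketch, assuming glibc- or musl-style `ldd` output and GNU `cp` (for `--parents`); the `shared_libs` and `copy_libs` helpers are hypothetical, and `/bin/ls` is only used as a convenient dynamically linked binary.

```bash
#!/bin/bash
set -euo pipefail

# shared_libs BINARY
# Prints the resolved path of every shared library BINARY is linked against.
# Fails for statically linked binaries, since `ldd` exits non-zero for them.
shared_libs() {
  ldd "$1" | awk '
    # "libz.so.1 => /lib/x86_64-linux-gnu/libz.so.1 (0x...)"
    $2 == "=>" && $3 ~ /^\// { print $3; next }
    # "libfoo.so => not found": report it on stderr
    $2 == "=>" { print "missing: " $1 > "/dev/stderr"; next }
    # "/lib64/ld-linux-x86-64.so.2 (0x...)": the dynamic loader itself
    $1 ~ /^\// { print $1 }
  '
}

# copy_libs BINARY DEST
# Copies each library (following symlinks) into DEST, keeping its absolute
# path, so DEST can be added to a slim runtime image.
copy_libs() {
  local lib
  shared_libs "$1" | while read -r lib; do
    cp --parents -L "$lib" "$2"
  done
}

shared_libs /bin/ls
```

For a Stack project, you would point `copy_libs` at the binary Stack built (for example, under `$(stack path --local-install-root)/bin`) instead of `/bin/ls`.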

To keep things simple, I decided to stick with a base image, which we could use to pull in any dependencies we don’t have, like libcurl-dev.

I initially tried to use Alpine, since it’s known for being one of the smallest images out there. However, getting a Haskell program running on it was not trivial, since it requires cross-compiling GHC.

So I settled on Debian, which is a larger image but has almost everything we need out of the box.

Building a Docker image on Travis CI is a fairly simple process. Pushing it to a registry and correctly tagging it was the annoying part. After a couple of hours of trial and error, I made a small shell script for authenticating with the registry and pushing a tagged image matching the current git tag and branch.

This script is called in the `after_success` step of the Travis CI build:

```bash
#!/bin/bash
set -euo pipefail
IFS=$'\n\t'

docker build -t myapp .

# If this is not a pull request, update the branch's Docker tag.
# Slashes are not allowed in Docker tags, so replace all of them with dashes.
if [ "$TRAVIS_PULL_REQUEST" = 'false' ]; then
  docker tag myapp "quay.io/myorg/myapp:${TRAVIS_BRANCH//\//-}" \
    && docker push "quay.io/myorg/myapp:${TRAVIS_BRANCH//\//-}"

  # If this commit has a tag, use it on the registry too.
  if [ -n "${TRAVIS_TAG:-}" ]; then
    docker tag myapp "quay.io/myorg/myapp:${TRAVIS_TAG}" \
      && docker push "quay.io/myorg/myapp:${TRAVIS_TAG}"
  fi
fi
```

As a result, we now have Docker images for our Haskell projects that are about 80 MB, which is not terrible, but can definitely be improved on.
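Branch names are a common source of tagging trouble: Docker tags may only contain letters, digits, underscores, periods, and dashes, must not start with a period or a dash, and are limited to 128 characters, while the parameter expansion in the tagging script only deals with slashes. As a more general sketch, a hypothetical `docker_tag` helper could sanitize any branch or git tag name before pushing:

```bash
#!/bin/bash
set -euo pipefail

# docker_tag NAME
# Hypothetical helper: converts a git branch or tag name into a valid Docker
# tag by replacing every disallowed character with '-', replacing a leading
# '.' or '-' with '_', and truncating the result to 128 characters.
docker_tag() {
  local tag
  tag=$(printf '%s' "$1" | LC_ALL=C sed -e 's/[^A-Za-z0-9_.-]/-/g' -e 's/^[.-]/_/')
  printf '%s\n' "${tag:0:128}"
}

docker_tag "feature/login/oauth"   # feature-login-oauth
docker_tag "v1.2.0"                # v1.2.0
docker_tag "-hotfix"               # _hotfix
```

In the Travis CI script, this would be used as `docker tag myapp "quay.io/myorg/myapp:$(docker_tag "$TRAVIS_BRANCH")"`.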

The next steps for me are investigating how to make our images even smaller by using a smaller base image, and automating the deployment of development and staging environments by having Travis CI notify a scheduler when a new image has been built.

I’m including some of my scripts and an example Dockerfile in a GitHub Gist for reference. You will most likely have to modify them to meet your needs.