Coming from OS X, I’ve grown accustomed to Tunnelblick, which is one of the best OpenVPN clients for the platform. It is not perfect, and there are many commercial offerings out there with a much nicer user interface; however, Tunnelblick gets the job done and it’s open source.

On Linux, the story is a bit different. Most distributions come with NetworkManager, which is, as the name implies, a daemon for managing network connections. On most systems, it is the component that takes care of connecting to Wi-Fi networks, setting up an Ethernet connection when you plug in the cable, and even managing 3G/4G modems.

NetworkManager has support for plugins, which has led it to support many VPN protocols, including OpenVPN!

When trying to figure out how to set up an OpenVPN client on Linux, it was pleasant to find that it is not only integrated with the main networking daemon, but also supported on the UI side, where most settings can be tweaked.

However, Tunnelblick still had something I couldn’t find how to do using NetworkManager alone: connecting to the VPN automatically and reconnecting in cases where the connection is dropped.

For me, this is a must-have feature for VPN clients, given that I tend to roam a lot with my laptop and can’t remember to connect every time it joins a new network.

Some initial digging led me to an Arch Linux wiki page describing how to write a script which sort-of achieves what I’m looking for. However, the approach seemed brittle and insecure, due to the fact that you have to make the connection available to other users on the system, and in some cases write connection secrets in plaintext.

After a while, I attempted to start writing a small daemon that would monitor D-Bus and respond to NetworkManager events by determining if a VPN connection should be started or stopped. An initial version was capable of determining if the VPN connection is active. However, due to lack of free time to work on it and the complexity of keeping track of the state of the machine, I decided to put it on hold.

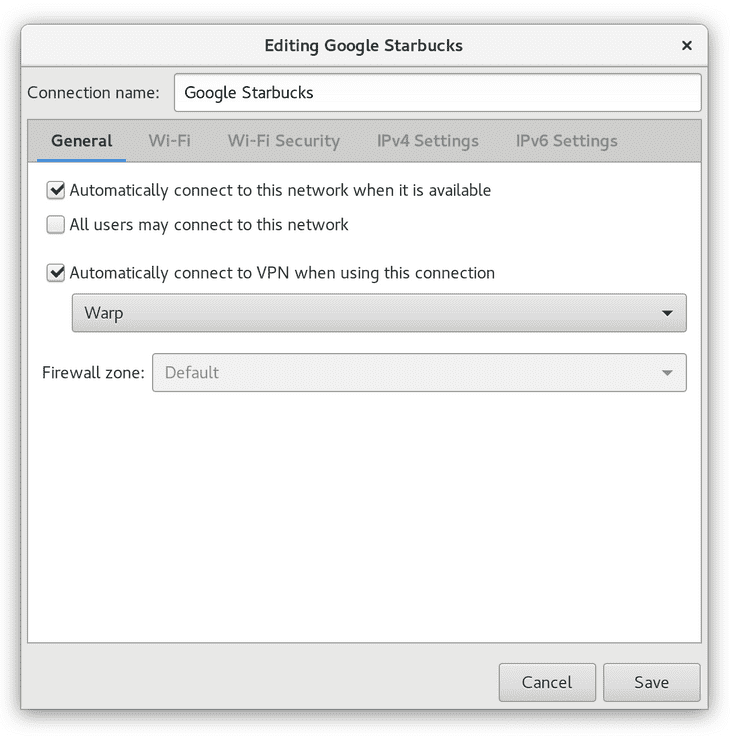

While working on this project, I did discover that NetworkManager does have some of this functionality built-in. It turns out you can specify a VPN to connect to as a requirement for some connections to succeed:

On Gentoo, this configuration can be accessed using nm-connection-editor, which can be installed using the gnome-extra/nm-applet package.

This is working great so far, but it does require some manual configuration for every new connection you set up, which can be annoying if you roam through many Wi-Fi networks.

In the future, I might resume work on the D-Bus approach in order to automate this a bit more. I would love it if my laptop simply did not trust any network and automatically attempted to connect to a VPN. It would also be nice if this were only attempted after a hotspot login is shown. For now, however, this should be enough.

Not too long ago I left OS X and installed Fedora on my laptop. Now, over the past few days, I’ve been working on bootstrapping a Gentoo install from within my Fedora setup.

Unlike other Linux distributions, Gentoo does not have an installer. It is expected that you set up and install the system yourself. Yes, it is tedious and definitely takes longer than following a setup wizard, but on the other hand you gain some knowledge of how Linux works and end up with a custom system.

The learning factor was what convinced me to give Gentoo a try.

Given the particular route I decided to take, the Gentoo Handbook only helped on certain parts of the process. On a normal setup, you start with a live image of Gentoo containing just enough tools to install the system. It is also assumed that you will start with an empty disk or one that you don’t mind erasing.

In my case, I have an SSD with Fedora and OS X partitions, which are both encrypted using each operating system’s built-in encryption methods. The Linux side consisted of a LUKS partition containing an LVM setup.

Thus, the first step was to figure out how to make space for Gentoo while also avoiding breaking the existing systems. Having LVM set up certainly helped here. I shrank my Fedora root (/) while keeping my home partition (/home) intact.

After that was done, I created a new partition for the root Gentoo filesystem and followed the section of the handbook on how to download the Stage3 archive and chroot into the environment.

Once chroot-ed, you can sort of begin using Gentoo and install packages using Portage. You can even run X programs if you have an X server running on the host. However, the goal here was to also boot into Gentoo directly. A chroot-ed environment meant that the system was still running on the Fedora kernel. A big part of installing Gentoo is building your own kernel and then rebooting into it.

Building a bootable kernel wasn’t too hard. I installed the kernel sources using Portage, configured them using make menuconfig, and compiled them. Once the build was finished, I copied the kernel over to my /boot partition so that GRUB could find it.

A few test boots showed that the system was booting correctly (and very fast too). Unfortunately, once booted nothing happened because the disk partition is encrypted using LUKS.

Getting LUKS and LVM to work was a bit painful due to how long it took me to find a working solution.

My initial attempt resulted in me trying to set up an initramfs using Fedora’s dracut tool, which was available in the Gentoo repository and mentioned in many guides on the distribution’s wiki. It seemed logical given that it was the same tool being used by the Fedora install, so getting it to work with Gentoo should have only consisted of building a similar image but pointing to the Gentoo partition.

That did not work out well. I was simply not able to get past the disk unlocking and mounting process. I think the problem may have been trying to make too many things work together: EFI, systemd, plymouth, cryptsetup, and lvm.

So, I continued with the other option presented by the Gentoo wiki, genkernel. This is a tool used to automate the process of building a kernel in Gentoo, but it also supports automatically building an initramfs. I initially tried to avoid it given that I wanted to build the kernel myself as a learning exercise. However, after installing it, I was pleased to find that building an initramfs did not involve building a kernel with genkernel.

A few key things to enable on my setup were the LUKS and LVM options in /etc/genkernel.conf, and adding dolvm to my kernel command line in GRUB.

BAM! It booted, asked for my password, and dropped me into a login shell.

I still had to go back to Fedora and chroot a couple more times until I got the right network drivers compiled. It was nice to find out that plugging in a Thunderbolt Ethernet adapter worked and did not crash the kernel like it does on Fedora.

Another thing I didn’t realize immediately was that the genkernel initramfs does not use systemd by default, so I had to add that to the kernel command line too.

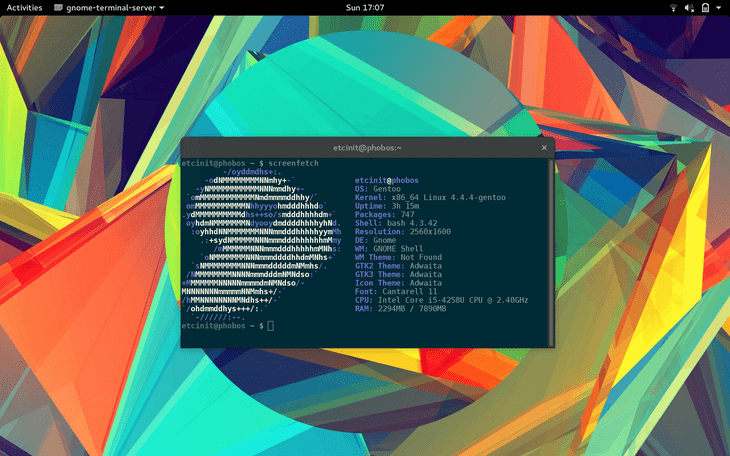

Then I continued to install software and drivers, but this time from within Gentoo. GNOME took a good 4-6 hours to compile, mainly due to WebKit taking so long.

Once I started GDM, NetworkManager, and the bluetooth service using systemctl, I finally felt like I had a fully working system on my hands.

Everything worked, except one major peripheral: the trackpad. Apparently, the usbhid driver was claiming the device before the right driver, so the keyboard worked, but the trackpad was dead.

After a few hours of debugging, I gave up and decided to try compiling the latest kernel available (4.4.4 vs 4.1.15), and after rebooting, the trackpad began working, including multitouch support!

Lastly, I switched from X to Wayland, given that X had an odd issue with some letters not rendering after the laptop came back from sleep. Adding Wayland support simply consisted of adding wayland wayland-compositor xwayland egl to my USE flags, and then recompiling the affected packages. After another reboot, “GNOME on Wayland” appeared as an option on the GDM login screen.

Summary

In general, I think I’m happy with my current build and will attempt to use it as my main driver for the next few weeks. If it turns out to not have any major issues, I’ll remove my Fedora partition to clear up some space.

The good

- Worked, almost, out-of-the-box: I was surprised by the amount of hardware that simply just worked out of the box by enabling the right kernel modules: GPU, Keyboard, Sound, Lid, Keyboard Backlight, Mic, Media Keys, USB, Thunderbolt.

- Needed some extra work: Wi-Fi, Trackpad.

- Closing the laptop actually puts it to sleep. No workarounds needed like in Fedora. Wayland is slightly better at this since it doesn’t turn on the screen for a few seconds while closed like Xorg does.

- Power consumption seems to be slightly better than Fedora, especially after enabling all the toggles on powertop.

- The system boots really fast. Around, maybe, 20-40 seconds from GRUB to GDM?

- The Gentoo repositories are generally closer to upstream than other distributions, and I’m also growing to like Portage’s USE keyword feature.

- Learned a lot about how modern Linux systems are set up.

The bad

- It’s not for everyone. Many users probably just want an OS that works out of the box.

- The compile time of some packages can be very long (I’m looking at you WebKit).

- Many commercial software packages are only distributed as debs or rpms.

Working with Hakyll has been great so far, but it seems the fun ends right after your site is generated and copied over to the remote server. From there, you have to deal with Nginx or Apache configuration files for the final touches, such as redirections, cache policies, or additional headers.

I’ve seen applications, such as XMonad, which employ Haskell scripts in lieu of configuration files, and it’s generally an enjoyable experience due to the added flexibility and customization it provides.

With that in mind, wouldn’t it be nice if the web server was in Haskell as well? After all, Hakyll has a preview function that works well enough. So, it shouldn’t be too hard to replicate that and add some custom logic on top of it.

Well, that’s exactly what I was wondering during the past few days, and (perhaps not too) surprisingly, it only took half a day’s worth of work to get a working prototype.

To get the job done, I added a new target to my Hakyll project’s cabal file, and included warp, wai-app-static, and a bunch of WAI middleware (wai-extra) as dependencies. The rest was merely a process of putting all the pieces together and ensuring the site behaved as expected.

The result

- A fast static site server.

- Support for HTTP 2.0 and HTTPS.

- Customized configurations for production, staging, and development.

- A more complete solution than hakyll server, since the site behaves exactly like it would on production.

- A clear path for adding more logic in the future (API, Proxy, etc).

The server code is a few orders of magnitude larger than a simple configuration file, but it’s also under 200 lines of code.

{-# LANGUAGE OverloadedStrings #-}

import Control.Concurrent (forkIO)
import qualified Data.ByteString as BS (ByteString, pack)
import Data.Maybe (fromMaybe, mapMaybe)
import Data.Monoid ((<>))
import Data.String (fromString)
import qualified Data.Text as T (Text, concat, pack)
import qualified Data.Text.Encoding as TE (encodeUtf8)
import Network.Wai (Application, Middleware, pathInfo)
import Network.Wai.Application.Static (defaultWebAppSettings, ss404Handler,
                                       ssAddTrailingSlash, ssIndices,
                                       ssMaxAge, ssRedirectToIndex, staticApp)
import Network.Wai.Handler.Warp (defaultSettings, run, setPort)
import Network.Wai.Handler.WarpTLS (runTLS, tlsSettingsChain)
import Network.Wai.Middleware.AddHeaders (addHeaders)
import Network.Wai.Middleware.ForceDomain (forceDomain)
import Network.Wai.Middleware.ForceSSL (forceSSL)
import Network.Wai.Middleware.Gzip (def, gzip)
import Network.Wai.Middleware.RequestLogger (logStdout)
import Network.Wai.Middleware.Vhost (redirectTo)
import Safe (lastMay)
import System.Environment (lookupEnv)
import WaiAppStatic.Types (MaxAge (MaxAgeSeconds), toPiece)

-- | The core application.
-- It serves files from `_site` which is where Hakyll will place the generated
-- site.
staticSite :: Maybe String -> Application
staticSite path = staticApp
  (defaultWebAppSettings $ fromString $ fromMaybe "_site" path)
    { ssIndices = mapMaybe (toPiece . T.pack) ["index.html"]
    , ssRedirectToIndex = False
    , ssAddTrailingSlash = True
    , ss404Handler = Just redirectApp
    , ssMaxAge = MaxAgeSeconds 3600
    }

-- | 404 handler.
-- We redirect users to the home page (`/`) if we can't locate the resource
-- they are looking for.
redirectApp :: Application
redirectApp req sendResponse = sendResponse $ redirectTo "/"

-- | Gzip compression middleware.
gzipMiddleware :: Middleware
gzipMiddleware = gzip def

-- | Domain redirection middleware.
-- When the site is live, we want to redirect users to the right domain name
-- regardless of whether they arrive from a www. domain, the server's IP
-- address or a spoof domain which is pointing to this server.
domainMiddleware :: Middleware
domainMiddleware = forceDomain
  (\domain -> case domain of
    "localhost" -> Nothing
    "chromabits.com" -> Nothing
    _ -> Just "chromabits.com")

-- | Common headers middleware.
headersMiddleware :: Middleware
headersMiddleware = addHeaders
  [ ("X-Frame-Options", "SAMEORIGIN")
  , ("X-XSS-Protection", "1; mode=block")
  , ("X-Content-Type-Options", "nosniff")
  ]

-- | Strict Transport Security middleware.
stsHeadersMiddleware :: Middleware
stsHeadersMiddleware = addHeaders
  [("Strict-Transport-Security", "max-age=31536000; includeSubdomains")]

-- | Content Security Policy middleware.
-- Here we add the CSP header which includes the policies for this blog.
cspHeadersMiddleware :: Middleware
cspHeadersMiddleware = addHeaders
  [("Content-Security-Policy", TE.encodeUtf8 $ glue policies)]
  where
    -- Joins the policies with "; " separators.
    glue :: [T.Text] -> T.Text
    glue [] = "default-src 'none'"
    glue [x] = x
    glue xs = T.concat $ map (\x -> T.concat [x, "; "]) (init xs) ++ [last xs]

    policies :: [T.Text]
    policies = [ "default-src 'self'"
               , "script-src 'self' 'unsafe-inline' https://use.typekit.net"
                 <> " https://cdn.mathjax.org https://connect.facebook.net"
                 <> " https://*.twitter.com https://cdn.syndication.twimg.com"
                 <> " https://gist.github.com"
                 <> " https://*.google-analytics.com/ga.js"
               , "img-src 'self' https: data: platform.twitter.com"
               , "font-src 'self' data: https://use.typekit.net"
                 <> " https://cdn.mathjax.org"
               , "style-src 'self' 'unsafe-inline' https://use.typekit.net"
                 <> " platform.twitter.com https://assets-cdn.github.com"
               , "frame-src https://www.youtube.com https://www.slideshare.net"
                 <> " staticxx.facebook.com www.facebook.com"
               ]

-- | De-indexify middleware.
-- Redirects any path ending in `/index.html` to just `/`.
deindexifyMiddleware :: Middleware
deindexifyMiddleware app req sendResponse =
  if lastMay (pathInfo req) == Just "index.html"
    then sendResponse $ redirectTo newPath
    else app req sendResponse
  where
    newPath :: BS.ByteString
    newPath = TE.encodeUtf8 $ processPath oldPath

    processPath :: [T.Text] -> T.Text
    processPath xs = case xs of
      [] -> "/"
      _ -> T.concat $ map prefixSlash xs

    -- Only evaluated when the last segment is "index.html", so the path is
    -- never empty here.
    oldPath :: [T.Text]
    oldPath = init $ pathInfo req

    prefixSlash :: T.Text -> T.Text
    prefixSlash x = T.concat ["/", x]

-- | Serves a WAI Application on the specified port.
-- The target port is printed to stdout beforehand, which can be useful for
-- debugging purposes.
listen :: Int -> Application -> IO ()
listen port app = do
  -- Inform which port we will be listening on.
  putStrLn $ "Listening on port " ++ show port ++ "..."
  -- Serve the WAI app using Warp
  run port app

-- | Serves a WAI Application over TLS on the specified port.
-- The target port is printed to stdout beforehand, which can be useful for
-- debugging purposes.
listenTLS :: Int -> Application -> IO ()
listenTLS port app = do
  certPath <- lookupEnv "BLOG_TLS_CERT"
  chainPath <- lookupEnv "BLOG_TLS_CHAIN"
  keyPath <- lookupEnv "BLOG_TLS_KEY"

  let tlsSettings = tlsSettingsChain
        (fromMaybe "cert.pem" certPath)
        [fromMaybe "fullchain.pem" chainPath]
        (fromMaybe "privkey.pem" keyPath)
  let settings = setPort port defaultSettings

  -- Inform which port we will be listening on.
  putStrLn $ "Listening on port " ++ show port ++ " (TLS)..."
  -- Serve the WAI app using Warp
  runTLS tlsSettings settings app

-- | The entry point of the server application.
main :: IO ()
main = do
  stage <- lookupEnv "BLOG_STAGE"
  path <- lookupEnv "BLOG_PATH"

  let liveMiddleware = logStdout
        $ cspHeadersMiddleware
        $ headersMiddleware
        $ domainMiddleware
        $ forceSSL
        $ deindexifyMiddleware
        $ gzipMiddleware
        $ staticSite path

  -- Depending on the stage we will choose a different set of middleware to
  -- apply to the application.
  case fromMaybe "dev" stage of
    -- "Production"
    "live" -> do
      forkIO $ listenTLS 443 $ stsHeadersMiddleware liveMiddleware
      listen 80 liveMiddleware
    "staging" -> do
      forkIO $ listenTLS 8443 liveMiddleware
      listen 8080 liveMiddleware
    -- "Development"
    _ -> listen 9090 (logStdout
      $ headersMiddleware
      $ deindexifyMiddleware
      $ gzipMiddleware
      $ staticSite path
      )

Let’s dive in!

The code above might not be too long, but it’s doing a few interesting things that you might not usually get out of a simple static site server:

forkIO

forkIO (from Control.Concurrent) was one of the most interesting things I encountered while writing the server, and it was also one of the last.

If you are writing a server to run on a single port, you don’t generally stop and ask yourself how you would modify it to run on multiple ports simultaneously.

Well, if you expect your site to use TLS and HTTP 2.0, you will most likely want to also redirect users from the insecure site to the secure one. This means you will need to listen on both ports 80 and 443.

My initial approach to this was very naive. I concluded that I could simply just run two servers by passing a flag at startup. However, this seemed cumbersome and error-prone.

The real solution led me to learn a part of Haskell that I had largely ignored before: concurrency.

I was pleased to find that forkIO allows you to create a lightweight thread that can execute another IO () action.
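To see forkIO in isolation, here is a minimal sketch that needs only the base library. The fakeListen function is a hypothetical stand-in for the real listeners (it just returns a message instead of serving requests), and an MVar hands the forked thread's result back to the main thread.

```haskell
import Control.Concurrent (forkIO)
import Control.Concurrent.MVar (newEmptyMVar, putMVar, takeMVar)

-- Hypothetical stand-in for a listener: it only reports which port it
-- would serve on and then returns, unlike a real server.
fakeListen :: Int -> IO String
fakeListen port = return ("listening on " ++ show port)

main :: IO ()
main = do
  done <- newEmptyMVar
  -- Run the "TLS" listener in a lightweight thread.
  _ <- forkIO $ fakeListen 443 >>= putMVar done
  -- Meanwhile, the main thread takes care of the plain listener.
  plain <- fakeListen 80
  -- Block until the forked thread has delivered its result.
  secure <- takeMVar done
  mapM_ putStrLn [plain, secure]
```

In the actual server no MVar is needed because the last listener never returns. That detail matters: a GHC program exits as soon as the main thread finishes, taking any forked threads with it.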

With it, I was able to spawn both servers at the same time:

case fromMaybe "dev" stage of
  -- "Production"
  "live" -> do
    forkIO $ listenTLS 443 $ stsHeadersMiddleware liveMiddleware
    listen 80 liveMiddleware

redirectTo

Hidden away in the Network.Wai.Middleware.Vhost module, you can find the redirectTo function, which is really useful for sending redirect (301) responses to clients.

On the server, I use it to define the 404 error handler:

redirectApp :: Application
redirectApp req sendResponse = sendResponse $ redirectTo "/"

forceDomain and forceSSL

To redirect users from an insecure (HTTP) site to a secure (HTTPS) site, you can use the forceSSL middleware.

To redirect users to the right domain name, you can use forceDomain. However, you need to pass in a custom function which specifies when users should be redirected and to which domain.

I prefer to use the naked domain for my blog, so I redirect anything that is not chromabits.com:

domainMiddleware :: Middleware
domainMiddleware = forceDomain
  (\domain -> case domain of
    "localhost" -> Nothing
    "chromabits.com" -> Nothing
    _ -> Just "chromabits.com")

No more index.html

One thing I don’t like about static sites is having .html extensions in the URL. In Hakyll, I managed to partially work around this problem by simply making sure that most paths result in index.html (e.g. /archive/ is actually /archive/index.html). However, you could still technically get to the index.html path if you visit it directly.

With this server, I wanted to add an additional redirect rule that would redirect users away from paths ending in index.html. It is implemented in the code above as deindexifyMiddleware.
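The path handling at the heart of that middleware can be sketched as a pure function. This version is only an illustration: it uses plain String segments instead of the Text values WAI provides, and the name deindexify is made up for this example.

```haskell
-- Hypothetical pure helper (not part of the server above): given the
-- path segments of a request, return the path to redirect to when the
-- last segment is "index.html", or Nothing when no redirect is needed.
deindexify :: [String] -> Maybe String
deindexify segments = case reverse segments of
  ("index.html" : rest) -> Just (render (reverse rest))
  _                     -> Nothing
  where
    -- Mirrors processPath: an empty path becomes "/", otherwise every
    -- segment is prefixed with a slash.
    render [] = "/"
    render xs = concatMap ('/' :) xs

main :: IO ()
main = do
  print (deindexify ["archive", "index.html"]) -- prints: Just "/archive"
  print (deindexify ["index.html"])            -- prints: Just "/"
  print (deindexify ["about.html"])            -- prints: Nothing
```

Keeping the decision in a pure function like this makes it easy to check the edge cases (such as the root path) without starting a server.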

In the future, I might consider writing a smarter middleware capable of handling more paths (e.g. /archive would simply be /archive.html).

Middleware stacks

Something that was not immediately obvious to me from reading the wai documentation was how to chain middleware together. By looking at the types, I eventually realized that a Middleware takes in an Application and returns a new Application which is wrapped with the middleware. This means that you can simply feed each middleware to the next one and end up with an application that is wrapped in the entire stack.
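A toy model can make the wrapping easier to picture. The types below are simplified stand-ins (an application here is just a function from a request string to a response string), and the tag helper is hypothetical; real WAI applications work with Request, Response, and a callback instead.

```haskell
-- Simplified stand-ins for WAI's Application and Middleware types.
type App = String -> String
type Mid = App -> App

-- Hypothetical middleware that wraps the inner response in its own
-- name, so the nesting of the stack is visible in the output.
tag :: String -> Mid
tag name app req = name ++ "(" ++ app req ++ ")"

-- The innermost application.
baseApp :: App
baseApp req = "page:" ++ req

main :: IO ()
main = do
  let site = tag "logger" $ tag "gzip" $ baseApp
  putStrLn (site "/archive/")
  -- prints: logger(gzip(page:/archive/))
```

The leftmost middleware ends up outermost, so it is the first to see a request and the last to see the response.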

type Middleware = Application -> Application

However, you will need to pay special attention to the order in which they are applied. For me, it was important that the logger middleware was at the top of the stack so that every request was logged.

-- Everything is logged.
site = logStdout $ forceSSL $ app

-- Some redirects will not be logged.
site = forceSSL $ logStdout $ app

Overkill? Perhaps. Fun? Definitely.

Given that I’m trying to learn more about Haskell, I’m constantly trying to find small projects to tackle with the language.

Writing your own server for a simple static site might sound like overkill, and it will most likely take longer than dealing with a couple of Apache configuration files.

That is, if your goal is to just get a site up, just stick to a pre-built server, but if you are looking to learn, writing your own might be worth your time.